An Emerging Cyber Security Threat: The Growing Threat of AI Employees, Insider Social Engineering, and Corporate Exploitation

The call came on a Tuesday morning that would forever change how one CEO viewed artificial intelligence, cybersecurity, and corporate security. Sarah, the Chief People Officer at a major technology firm, had requested an urgent meeting with their CEO, who we’ll refer to as Michael. What she revealed in that conference room would expose a vulnerability so profound that it challenged everything they thought they knew about insider threats.

"Michael," Sarah began, her voice trembling slightly, "I need to tell you something that's going to sound impossible, and I’m a little embarrassed to tell you, but I have a responsibility to do so. We've unknowingly had five people on our payroll who aren’t real for the past two weeks."

The silence that followed was deafening. Michael, a seasoned executive who had navigated countless crises, found himself speechless. The implications began cascading through his mind like dominoes falling in slow motion. How many "employees" were actually sophisticated AI systems? What data had they accessed? What damage had already been done?

The names in this example are fake, but the story is real and the CEO is someone who I’ve known personally for over a decade.

This wasn't a scene from a dystopian thriller. It was a real conversation and it’s one that is happening in boardrooms around the world as companies grapple with an emerging threat that traditional cybersecurity frameworks never anticipated. The age of AI employees has arrived. The risk isn’t payroll fraud… it’s much greater. With it comes a new category of insider threat that exploits the very foundation of organizational trust: the assumption that the person you hired is actually a person.

Timothy Youngblood, CISO at Astrix Security and former CISO at McDonald's, recently observed this shift. "We are moving from AI as an efficiency tool to AI making autonomous security decisions. That shift is both powerful and risky. The future of cyber leadership will be about striking the right balance — trusting AI while maintaining human oversight" [1]. But what happens when that AI isn't working for you—it's working against you?

The statistics paint a sobering picture of our current vulnerability. According to Gartner's latest research, by 2028, globally one in four job candidates will be fake. This trend is driven largely by AI-generated profiles [2]. Meanwhile, 83% of organizations reported at least one insider attack in 2024. The average cost of insider threats reached $17.4 million annually—a figure that continues to climb as these attacks become more sophisticated [3][4].

This article examines the emerging threat of AI-powered "ghost employees." These are digital agents with fabricated identities that successfully infiltrate corporate workforces through sophisticated deepfake technology and social engineering. Unlike traditional external cyberattacks that attempt to breach perimeter defenses, these AI employees walk through the front door. They arrive with valid credentials, legitimate access, and the implicit trust that comes with being an "insider."

For corporate innovators, startup founders, and cybersecurity leaders, understanding this threat isn't just about defense. It's about survival in an era where the line between human and artificial intelligence has become dangerously blurred. The call isn't just coming from inside the house anymore. The call might be coming from something that was never human to begin with.

Defining AI Employees and the New Attack Vector

To understand the magnitude of this emerging threat, we must first distinguish between legitimate AI employees and malicious ones. Legitimate AI employees are the digital assistants and automated systems companies intentionally deploy. Malicious AI employees are sophisticated digital agents with fabricated identities that infiltrate organizations through deception.

Malicious AI employees represent a paradigm shift in cyber warfare. Unlike traditional external threats that attempt to breach firewalls, steal credentials, or exploit software vulnerabilities, these digital infiltrators exploit the most fundamental weakness in any security system: human trust. They don't hack their way in—they apply for jobs, interview successfully, and get hired through normal HR processes.

The technology enabling this threat has reached a tipping point of sophistication and accessibility. Modern generative AI can create convincing résumés, fabricate employment histories, generate professional headshots, and even conduct real-time video interviews using deepfake technology. Vijay Balasubramaniyan, CEO of Pindrop Security, recently warned about this development. "Gen AI has blurred the line between what it is to be human and what it means to be machine" [5].

The Anatomy of Deception

The creation of a malicious AI employee begins with identity fabrication. Advanced AI systems can generate complete professional personas, including several key components.

Synthetic Identity Creation involves AI-generated names, addresses, and social security numbers that pass basic verification checks. These identities often combine real and fabricated elements. This makes them particularly difficult to detect through traditional background screening processes.

Professional History Generation uses sophisticated language models to create detailed employment histories. These include job descriptions, accomplishments, and references. These histories are often crafted to match specific job requirements. They demonstrate an understanding of industry terminology and best practices that would impress human recruiters.

Visual Representation creates high-quality AI-generated headshots and professional photos that appear authentic to human observers. These images are often created using generative adversarial networks (GANs) trained on millions of real human faces.

Behavioral Modeling allows advanced AI systems to analyze communication patterns from legitimate employees within target organizations. This enables them to mimic writing styles, terminology, and cultural references that make them appear to be genuine cultural fits.

The case of "Ivan X" at Pindrop Security shows how sophisticated these attacks have become. The candidate appeared to have all the right qualifications for a senior engineering role. During the video interview, recruiters noticed that his facial expressions were slightly out of sync with his words. This was a telltale sign of deepfake technology. Further investigation revealed that while Ivan claimed to be located in western Ukraine, his IP address indicated he was actually operating from a possible Russian military facility near the North Korean border [6].

This represents a fundamental evolution from traditional social engineering attacks. Previous insider threats typically involved compromising existing employees or recruiting malicious insiders. AI employees create entirely new identities that exist solely to infiltrate and exploit target organizations.

The Scale of the Problem

The scope of this threat extends far beyond isolated incidents. According to recent research, more than 300 U.S. firms have inadvertently hired impostors with ties to North Korea for IT work. These include major television networks, defense manufacturers, automakers, and Fortune 500 companies [7]. These workers used stolen American identities to apply for remote jobs. They deployed sophisticated techniques to mask their true locations. Ultimately, they sent millions of dollars in wages to North Korea's weapons program.

Ben Sesser, CEO of BrightHire, helps over 300 corporate clients assess prospective employees. He reports that the number of fraudulent job candidates has "ramped up massively" in 2025 [8]. His company's data suggests that the problem is accelerating as AI technology becomes more accessible and sophisticated.

The threat isn't limited to any single industry or geographic region. Lili Infante, founder and CEO of CAT Labs, runs a cybersecurity and cryptocurrency startup. She reports that "every time we list a job posting, we get 100 North Korean spies applying to it" [9]. The targeting is particularly intense for companies in cybersecurity, cryptocurrency, and technology sectors. These are areas where remote work is common and the potential for valuable data theft is high.

Beyond Traditional Threat Models

What makes AI employees particularly dangerous is their ability to operate outside traditional threat detection models. Most cybersecurity frameworks are designed to detect anomalous behavior from known entities. They look for unusual login patterns, suspicious data access, or communications with external threat actors. AI employees, however, are designed to appear normal from the moment they're hired.

They don't need to steal credentials because they have legitimate access. They don't need to bypass security controls because they're authorized users. They don't need to hide their activities because their behavior is designed to mimic that of productive employees. Dr. Larry Ponemon, founder of the Ponemon Institute, observes this challenge. "Insider threats often cost more than external attacks—because insiders know how to hide it" [10].

This represents a fundamental challenge to the zero-trust security model that many organizations have adopted. Zero-trust architectures are built on the principle of "never trust, always verify." However, they still rely on initial identity verification during the hiring process. Once that verification is complete and access is granted, the system assumes the user is legitimate. AI employees exploit this assumption by successfully passing the initial verification process through sophisticated deception.

The implications extend beyond immediate security concerns. These AI employees can gather intelligence about internal processes. They can identify valuable targets for future attacks. They can even recruit human employees for espionage activities. They represent a new category of advanced persistent threat (APT) that operates not through technical exploitation, but through social and organizational infiltration.

Anatomy of the AI Employee Scam

Understanding how AI employee infiltration works requires examining the sophisticated multi-stage process that transforms artificial intelligence into convincing human imposters. This isn't a simple case of submitting fake résumés. It's a carefully orchestrated campaign that exploits every stage of the modern hiring process.

Stage 1: Target Selection and Intelligence Gathering

The attack begins long before any job application is submitted. Sophisticated threat actors conduct extensive reconnaissance on target organizations. They analyze everything from company culture and communication styles to specific job requirements and hiring practices.

Organizational Profiling involves attackers studying target companies through public sources. They examine LinkedIn profiles, company websites, press releases, and social media posts. They identify key personnel, understand reporting structures, and analyze the language and terminology commonly used within the organization.

Job Market Analysis sees threat actors monitoring job postings not just for immediate opportunities, but to understand hiring patterns, required skills, and compensation ranges. This intelligence helps them create profiles that are perfectly tailored to organizational needs.

Cultural Intelligence uses advanced AI systems to analyze publicly available communications from target organizations. They study blog posts, social media content, and even employee reviews on sites like Glassdoor. This helps them understand company culture and values. This allows them to craft personas that appear to be ideal cultural fits.

Stage 2: Identity Creation and Backstory Development

Once a target is selected, the process of creating a convincing artificial identity begins. This stage leverages multiple AI technologies working in concert to create a comprehensive digital persona.

Synthetic Identity Generation sees advanced AI systems create complete identities using a combination of real and fabricated information. These identities often use legitimate social security numbers obtained through data breaches. They combine these with AI-generated names and addresses. The result is an identity that passes basic verification checks while being completely controlled by the threat actor.

Professional History Fabrication uses large language models to generate detailed employment histories. These are specifically tailored to target job requirements. These histories include not just job titles and dates, but detailed descriptions of accomplishments, projects, and responsibilities. They demonstrate a deep understanding of industry practices.

Consider this example of an AI-generated professional summary for a cybersecurity role:

"Experienced cybersecurity analyst with 7 years of experience implementing zero-trust architectures and managing SOC operations. Led incident response for a Fortune 500 financial services company during a sophisticated APT campaign, reducing mean time to detection by 40% through implementation of behavioral analytics and threat hunting protocols. Certified in CISSP, GCIH, and AWS Security Specialty."

This summary demonstrates technical knowledge, uses appropriate industry terminology, and includes specific metrics that would appeal to hiring managers. All of this was generated by AI systems trained on thousands of legitimate cybersecurity professional profiles.

Reference Network Creation involves sophisticated operations creating entire networks of fake references. These come complete with phone numbers and email addresses that are answered by AI voice systems or confederates. These references are prepared with detailed backstories about their "relationship" with the candidate. They can provide convincing testimonials during reference checks.

Stage 3: Visual Identity and Deepfake Preparation

The visual component of AI employee creation has become increasingly sophisticated. It leverages cutting-edge deepfake technology to create convincing video personas.

Synthetic Face Generation uses AI systems to create photorealistic faces of people who don't exist. They use generative adversarial networks (GANs) trained on millions of real human faces. These images are often indistinguishable from real photographs. They can be generated in multiple poses and lighting conditions to create a complete visual identity.

Deepfake Video Preparation involves threat actors preparing deepfake systems for video interviews. These systems can generate real-time video of their synthetic identity. These systems can map facial expressions and mouth movements to match spoken words. This creates the illusion of a live video conversation.

Voice Synthesis uses advanced text-to-speech systems to create unique voice profiles for each AI employee. These voices are often trained on samples of real human speech to create natural-sounding conversation patterns. They include appropriate accents and speech patterns for the claimed geographic location.

Stage 4: Application and Initial Screening

With the identity fully prepared, the AI employee begins the formal application process. This stage demonstrates how thoroughly these systems can mimic human behavior throughout standard hiring procedures.

Automated Application Submission allows AI systems to automatically fill out job applications. They tailor responses to specific job requirements while maintaining consistency with the fabricated identity. These applications often include personalized cover letters that reference specific details about the target company and role.

Initial Screening Responses happen when AI employees are contacted by recruiters. They respond promptly and professionally, demonstrating enthusiasm for the role and deep knowledge of the industry. These communications are often indistinguishable from those of legitimate candidates.

Skills Assessment Completion occurs when AI employees complete coding challenges, technical assessments, and other skills-based evaluations for technical roles. Advanced AI systems can solve complex programming problems, demonstrate knowledge of specific technologies, and even explain their reasoning in follow-up discussions.

Stage 5: The Interview Process

The interview stage represents the most sophisticated aspect of AI employee infiltration. It requires real-time interaction that can expose the artificial nature of the candidate.

Video Interview Execution uses deepfake technology during video interviews to create the illusion of a live conversation. However, as the Pindrop Security case demonstrated, subtle inconsistencies can reveal the deception to trained observers. These include facial expressions being slightly out of sync with speech.

Technical Discussion Navigation prepares AI employees to discuss complex technical topics in real-time. They draw on vast databases of technical knowledge to provide convincing answers to interview questions. They can discuss past projects, explain technical decisions, and even engage in problem-solving exercises.

Behavioral Interview Responses show that AI employees can navigate behavioral interview questions. They provide detailed examples of past experiences (entirely fabricated) that demonstrate desired soft skills and cultural fit.

Stage 6: Background Verification Evasion

Modern AI employee operations have developed sophisticated methods for passing background checks and verification processes.

Document Forgery creates high-quality fake documents, including diplomas, certifications, and previous employment verification letters. These are created using AI-assisted design tools. These documents often include security features and formatting that make them difficult to distinguish from legitimate credentials.

Reference Coordination activates the network of fake references during background checks. AI voice systems or human confederates provide convincing testimonials about the candidate's performance and character.

Digital Footprint Creation supports fabricated identities by creating complete digital footprints retroactively. This includes social media profiles, professional networking accounts, and even traces of past online activity that support their claimed background.

Stage 7: Onboarding and Initial Operations

Once hired, AI employees begin the most dangerous phase of their operation. They gain access to organizational systems and begin intelligence gathering.

System Access Acquisition happens through normal onboarding processes. AI employees gain legitimate access to corporate systems, email, and potentially sensitive data. This access is granted willingly by the organization. This makes it particularly difficult to detect through traditional security monitoring.

Behavioral Mimicry involves AI employees studying the communication patterns and work habits of their colleagues. They adapt their behavior to blend in seamlessly with organizational culture. They participate in meetings, respond to emails, and even engage in casual workplace conversations.

Intelligence Collection begins once legitimate access is established. AI employees systematically gather intelligence about organizational structure, processes, and valuable data assets. This intelligence gathering is often subtle and spread over time to avoid detection.

Stage 8: Exploitation and Damage

The final stage of AI employee operations involves leveraging their insider access to achieve their ultimate objectives.

Data Exfiltration allows AI employees to systematically copy sensitive data, intellectual property, and confidential information. Because they have legitimate access, this activity often doesn't trigger security alerts.

System Compromise uses insider knowledge of security procedures and system architecture. AI employees can install malware, create backdoors, or compromise critical systems in ways that would be impossible for external attackers.

Social Engineering enables AI employees to recruit human colleagues for espionage activities. They leverage their trusted insider status to manipulate other employees into providing additional access or information.

Long-term Persistence differs from traditional cyberattacks that are often detected and remediated quickly. AI employees can maintain their access for months or years. They continuously gather intelligence and expand their influence within the organization.

The sophistication of this multi-stage process demonstrates why AI employee threats represent such a significant challenge for traditional cybersecurity approaches. Each stage exploits different aspects of organizational trust and standard business processes. This creates a comprehensive attack vector that bypasses most existing security controls.

Why This Threat is More Dangerous Than Traditional Cyberattacks

The emergence of AI employees as insider threats represents a fundamental shift in the cybersecurity landscape. This shift challenges the basic assumptions underlying most organizational security strategies. To understand why this threat is uniquely dangerous, we must examine how it exploits the inherent weaknesses in human-centered security models. It also amplifies the advantages that insider threats have always possessed.

The Insider Advantage Amplified

Traditional insider threats have long been recognized as particularly dangerous because insiders possess legitimate access to organizational systems and data. However, AI employees take this advantage to an unprecedented level. They combine insider access with the computational capabilities and persistence of artificial intelligence.

Recent research reveals the scope of the insider threat challenge. According to the 2024 Insider Threat Report by Cybersecurity Insiders, 53% of cybersecurity professionals believe insider attacks are more difficult to detect and prevent than external cyber-attacks. This represents an increase of more than 10% over the past five years [11]. Even more striking, 92% of organizations find insider attacks equally or more challenging to detect than external cyber attacks [12].

The financial impact of insider threats continues to escalate. The average annual cost of insider threats reached $17.4 million in 2025, up from $16.2 million in 2023 [13]. For organizations experiencing multiple incidents, the costs can be even more severe. 32% of affected organizations report recovery costs between $100,000 and $499,000 per incident. Meanwhile, 21% face costs ranging from $1 million to $2 million [14].

AI employees amplify these existing challenges in several ways.

Computational Persistence means that unlike human insiders who may have limited time, attention, or motivation, AI employees can operate continuously. They systematically analyze organizational data and identify valuable targets without fatigue or distraction.

Perfect Memory and Analysis allows AI employees to retain and cross-reference every piece of information they encounter. They build comprehensive intelligence profiles that would be impossible for human insiders to maintain.

Scalability differs from traditional insider threats that typically involve individual actors. AI employee operations can potentially scale to include multiple synthetic identities across different departments or even organizations. This creates coordinated insider threat networks.

Bypassing Zero-Trust Architectures

The rise of zero-trust security models was supposed to address the limitations of perimeter-based security. They implement the principle of "never trust, always verify." However, AI employees expose a fundamental flaw in zero-trust implementations. They still rely on initial identity verification during the hiring process.

Recent analysis of zero-trust limitations notes this vulnerability. "ZTNA systems minimize potential risks while ensuring seamless user experiences. However, they remain vulnerable to threats involving employees or partners with valid login credentials" [15]. This vulnerability becomes critical when the initial identity verification process itself can be compromised through sophisticated AI-generated deception.

The problem is structural. Zero-trust architectures excel at detecting anomalous behavior from known entities. They look for unusual login patterns, suspicious data access, or communications with external threat actors. But AI employees are specifically designed to appear normal from the moment they're hired. They don't need to steal credentials because they have legitimate access. They don't need to bypass security controls because they're authorized users.

Consider the typical zero-trust verification process:

Identity Verification: During hiring, HR verifies the candidate's identity through background checks and document verification

Access Provisioning: Once hired, the employee receives appropriate system access based on their role

Continuous Monitoring: The system monitors the employee's behavior for anomalies

Risk Assessment: Unusual activities trigger additional verification or access restrictions

AI employees exploit step one by successfully passing identity verification through sophisticated deception. They then operate within the bounds of normal behavior to avoid triggering steps three and four. Security expert Bruce Schneier observes this challenge. "If you think tech will solve your security problems, you don't understand either" [16].

The Trust Exploitation Problem

Perhaps the most insidious aspect of AI employee threats is how they exploit organizational trust. This is the social fabric that enables collaboration and productivity in modern workplaces. This exploitation operates on multiple levels.

Institutional Trust requires organizations to trust their hiring processes to identify legitimate candidates. AI employees undermine this trust by demonstrating that even sophisticated verification procedures can be defeated.

Interpersonal Trust develops as colleagues create working relationships with AI employees, sharing information and collaborating on projects. This interpersonal trust becomes a vector for intelligence gathering and social engineering.

Systemic Trust can be damaged when the discovery of AI employees within an organization creates a crisis of confidence. This extends far beyond the immediate security implications. Stéphane Nappo, Global CISO at Groupe SEB, warns about this impact. "It takes 20 years to build a reputation and a few minutes of a cyber-incident to ruin it" [17].

The psychological impact on human employees can be profound. When organizations discover they've been employing AI agents, it raises fundamental questions about the authenticity of workplace relationships. It also questions the reliability of organizational processes. This erosion of trust can have lasting effects on employee morale, collaboration, and organizational culture.

Advanced Persistent Presence

Traditional cyberattacks, even sophisticated Advanced Persistent Threats (APTs), typically involve external actors attempting to maintain unauthorized access to organizational systems. These attacks can often be detected and remediated through network monitoring, endpoint detection, and incident response procedures.

AI employees represent a new category of threat: Advanced Persistent Presence (APP). Unlike APTs that must constantly evade detection while maintaining unauthorized access, AI employees have legitimate presence within the organization. This legitimate presence provides several advantages.

Authorized Data Access allows AI employees to access sensitive information through normal business processes. This makes their data gathering activities appear routine and legitimate.

Long-term Intelligence Gathering becomes possible with no need to hide their presence. AI employees can conduct extended intelligence gathering operations. They build comprehensive understanding of organizational vulnerabilities and valuable assets.

Social Engineering Opportunities emerge as trusted insiders. AI employees can manipulate human colleagues more effectively than external threat actors. They can potentially recruit additional sources or gain access to information beyond their formal authorization.

Operational Intelligence allows AI employees to observe and report on organizational security procedures, incident response capabilities, and defensive measures. This provides valuable intelligence for future attacks.

The Scale and Coordination Threat

While traditional insider threats typically involve individual actors with limited scope and capabilities, AI employee operations can potentially achieve unprecedented scale and coordination. The technology that enables one AI employee can be replicated to create multiple synthetic identities across different organizations or departments.

This scalability creates several concerning scenarios.

Cross-Organizational Intelligence could involve AI employees placed in multiple organizations within the same industry. They could gather and correlate intelligence across competitors, providing comprehensive market intelligence to threat actors.

Supply Chain Infiltration might see AI employees strategically placed throughout supply chains. This would provide visibility into the operations and vulnerabilities of entire business ecosystems.

Coordinated Operations could involve multiple AI employees within the same organization coordinating their activities. Some might focus on intelligence gathering while others work to compromise systems or recruit human assets.

Detection Challenges

The sophisticated nature of AI employee threats creates unique detection challenges that traditional security tools are not designed to address.

Behavioral Baseline Establishment typically sees security systems establish behavioral baselines for users over time. They flag activities that deviate from normal patterns. AI employees, however, are designed to establish "normal" patterns that support their intelligence gathering activities.

Communication Analysis can be performed by security tools to analyze communications for suspicious content or external contacts. AI employees can conduct their operations through legitimate business communications and established channels.

Data Access Monitoring usually involves traditional data loss prevention (DLP) tools that focus on preventing unauthorized data access or unusual data movement patterns. AI employees access data through authorized channels and can exfiltrate information in ways that appear to be normal business activities.

Social Engineering Detection becomes difficult because the interpersonal manipulation conducted by AI employees often occurs through face-to-face conversations, phone calls, or informal communications. These are difficult for technical security tools to monitor and analyze.

The Regulatory and Compliance Implications

The emergence of AI employee threats also creates significant regulatory and compliance challenges. Many compliance frameworks assume that organizations have effective controls over employee identity verification and insider threat detection. The ability of AI employees to bypass these controls raises questions about the adequacy of existing compliance requirements.

Keith Enright, former Chief Privacy Officer at Google, notes this challenge. "AI is accelerating both cyber threats and regulatory responses. Policymakers are scrambling to put guardrails in place, but the pace of innovation is making it harder than ever to keep up" [19].

Organizations may find themselves in violation of regulatory requirements not because they failed to implement required controls. Instead, it's because those controls were insufficient to detect sophisticated AI-enabled deception. This creates a new category of compliance risk that extends beyond traditional cybersecurity concerns.

The Competitive Intelligence Threat

Beyond immediate security concerns, AI employees represent a significant threat to competitive intelligence and intellectual property protection. Unlike traditional corporate espionage, which often relies on recruiting human insiders or conducting external surveillance, AI employees can be placed directly within target organizations. They arrive with legitimate access to sensitive information.

This threat is particularly concerning for organizations in competitive industries. In these sectors, intellectual property, strategic plans, and market intelligence represent significant competitive advantages. AI employees can systematically gather and analyze this information. They provide comprehensive intelligence to competitors or foreign adversaries.

The long-term implications of this threat extend beyond individual organizations to entire industries and national economic security. Ginni Rometty, former CEO of IBM, observed the broader challenge. "Cybercrime is the single biggest threat to every company on earth" [20]. The emergence of AI employees as a vector for cybercrime amplifies this threat significantly.

Understanding these unique dangers is essential for developing effective countermeasures. Addressing the AI employee threat requires a fundamental rethinking of organizational security strategies. Organizations must move beyond traditional technical controls to address the human and social dimensions of this emerging threat.

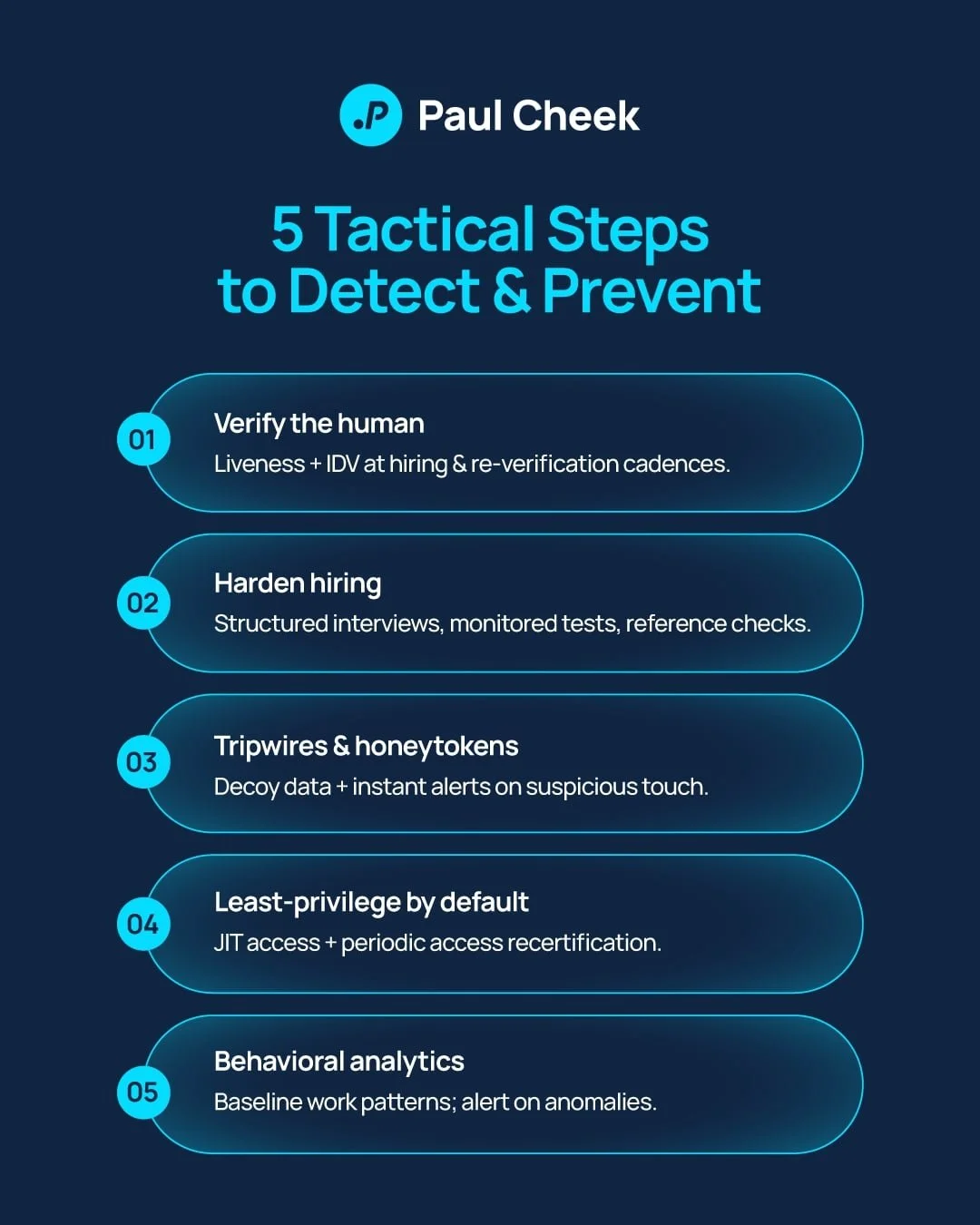

Tactical Steps Companies Must Take Now

The emergence of AI employee threats demands immediate action from corporate leaders, HR departments, and cybersecurity teams. The following tactical framework provides a comprehensive approach to detecting, preventing, and mitigating these sophisticated insider threats. These measures must be implemented with urgency, as the threat landscape continues to evolve rapidly.

1. Reinforce Human Verification in Hiring Processes

The first line of defense against AI employees lies in strengthening identity verification during the hiring process. Traditional background checks and document verification are no longer sufficient to detect sophisticated AI-generated identities.

Enhanced Video Interview Protocols organizations must implement rigorous video interview procedures designed to detect deepfake technology and AI-generated personas. Key elements include several important components.

Real-time Interaction Testing involves conducting unscripted portions of interviews where candidates must respond to unexpected questions or requests. Ask candidates to turn their head to different angles, hold up specific objects, or perform simple physical actions. These are difficult for current deepfake technology to replicate convincingly.

Technical Interview Depth requires conducting deep-dive technical discussions for technical roles that go beyond prepared responses. Ask candidates to explain their reasoning process, walk through complex problem-solving scenarios, and demonstrate hands-on technical skills in real-time.

Multiple Interview Formats uses a combination of video calls, phone interviews, and in-person meetings when possible. Inconsistencies across different interaction modes can reveal AI-generated personas.

Interviewer Training involves training hiring managers and HR personnel to recognize signs of deepfake technology. This includes subtle inconsistencies in facial expressions, lip-sync issues, and unnatural eye movements.

Identity Verification Technology organizations should implement advanced identity verification tools that go beyond traditional document checking.

Biometric Verification uses facial recognition and voice analysis tools to verify that the person in video interviews matches provided identification documents. However, be aware that sophisticated deepfake technology can potentially defeat some biometric systems.

Document Authentication employs advanced document verification services that can detect AI-generated or manipulated identification documents, diplomas, and certifications.

Real-time Verification requires candidates to provide real-time verification of their identity through video calls. They display government-issued identification and perform specific actions to prove they are the person in the document.

Reference Verification Enhancement-traditional reference checks must be enhanced to detect AI-generated reference networks.

Unscheduled Reference Calls involve conducting reference calls at unexpected times and asking detailed, specific questions about the candidate's work history and performance.

Cross-Reference Verification ensures that reference contacts have legitimate professional histories and can be independently confirmed through professional networks and public records.

In-depth Technical Discussions for technical roles have technical team members speak directly with references about specific projects and technical challenges the candidate allegedly worked on

2. Deploy AI Behavior Anomaly Detection Systems

Organizations must implement sophisticated monitoring systems specifically designed to detect AI-generated behavior patterns and anomalous activities that may indicate the presence of AI employees.

Behavioral Analytics Implementation a Deploy User and Entity Behavior Analytics (UEBA) systems that can identify subtle patterns indicative of AI-generated behavior.

Communication Pattern Analysis monitors email communications, chat messages, and document creation for patterns that may indicate AI generation. This includes unusual consistency in writing style, response times, or linguistic patterns.

Work Pattern Monitoring analyzes work schedules, task completion patterns, and productivity metrics for signs of non-human behavior. This includes perfectly consistent work patterns or unusual productivity levels.

Social Interaction Analysis monitors participation in meetings, informal communications, and social activities for patterns that may indicate artificial personas.

Advanced Monitoring Capabilities implements comprehensive monitoring systems that can detect sophisticated AI employee activities.

24/7 Activity Monitoring deploys systems that can track employee activities around the clock. They look for patterns that may indicate automated or AI-driven behavior.

Linguistic Pattern Analysis uses natural language processing tools to analyze employee communications for signs of AI generation. This includes unusual vocabulary patterns, response consistency, or lack of natural linguistic variation.

Data Access Pattern Analysis monitors how employees access and interact with data. It looks for systematic patterns that may indicate automated intelligence gathering.

Cross-System Correlation implements monitoring that correlates activities across multiple systems to identify coordinated or systematic behavior patterns.

3. Implement Internal Honeypots and Tripwires

Create sophisticated internal monitoring systems designed to detect unauthorized intelligence gathering and data exfiltration activities.

Strategic Honeypot Deployment a Deploy honeypots and decoy systems throughout the organization to detect AI employee intelligence gathering.

Fake Sensitive Documents create convincing but fabricated sensitive documents and monitor access to identify potential AI employees conducting systematic intelligence gathering.

Decoy Data Systems implement fake databases and file systems containing attractive but false information. They include monitoring to detect unauthorized access attempts.

Canary Tokens embed tracking tokens in documents and systems that alert security teams when accessed. This helps identify systematic data gathering activities.

Tripwire Systems implements automated alert systems that can detect suspicious activities.

Unusual Data Access Patterns set up alerts for employees who access data outside their normal job requirements or who systematically access large volumes of information.

Communication Monitoring monitors for employees who attempt to establish unauthorized external communications or who exhibit unusual communication patterns.

System Behavior Alerts implement alerts for unusual system usage patterns. This includes automated tool usage or scripted activities that may indicate AI-driven behavior.

4. Update Cybersecurity and HR Policies

Organizations must fundamentally update their policies and procedures to address the unique challenges posed by AI employee threats.

Policy Framework Updates revise organizational policies to explicitly address AI employee threats.

Identity Verification Standards establish clear standards for identity verification that include specific procedures for detecting AI-generated personas and deepfake technology.

Insider Threat Policies update insider threat policies to specifically address AI employees and the unique challenges they present for detection and response.

Data Access Controls implement stricter controls on data access, particularly for new employees. Use graduated access privileges based on tenure and verification status.

Incident Response Procedures develop specific incident response procedures for suspected AI employee infiltration. Include immediate containment measures and investigation protocols.

HR Process Enhancement updates human resources procedures to address AI employee threats.

Extended Probationary Periods implement longer probationary periods for new employees. Include enhanced monitoring and verification during this time.

Continuous Verification establish procedures for ongoing identity verification throughout employment, particularly for employees with access to sensitive information.

Background Check Enhancement require more comprehensive background checks that include verification of digital footprints and social media presence.

Regular Security Training implement regular training for HR personnel on detecting AI-generated personas and sophisticated social engineering attempts.

5. Train Managers and HR on AI-Generated Behavior Detection

Human detection capabilities remain important for identifying AI employees. Sophisticated AI systems may still exhibit subtle behavioral patterns that trained observers can recognize.

Management Training Programs develop comprehensive training programs for managers and supervisors.

Behavioral Recognition Training trains managers to recognize subtle signs of AI-generated behavior. This includes unusual consistency in work patterns, lack of natural human variation, or absence of typical human workplace behaviors.

Social Interaction Monitoring educates managers on how to observe and assess employee social interactions. They look for signs that may indicate artificial personas.

Escalation Procedures establish clear procedures for managers to report suspected AI employees. Ensure that concerns are investigated promptly and thoroughly.

HR Specialist Training provides specialized training for HR personnel.

Advanced Interview Techniques train HR professionals in advanced interview techniques designed to detect AI-generated personas and deepfake technology.

Technology Awareness educates HR teams about the capabilities and limitations of current AI technology. This helps them understand what to look for during the hiring process.

Verification Procedures establish detailed procedures for verifying candidate identities and backgrounds. Include the use of specialized tools and services.

Ongoing Assessment** trains HR personnel to conduct ongoing assessments of employee authenticity, particularly during the early stages of employment.

Implementation Roadmap

Organizations should implement these measures according to the following priority framework.

Immediate Actions (0-30 days) include enhancing video interview protocols, implementing basic behavioral monitoring, updating incident response procedures, and beginning manager and HR training programs.

Short-term Actions (30-90 days) involve deploying advanced identity verification technology, implementing comprehensive UEBA systems, creating internal honeypots and tripwires, and updating all relevant policies and procedures.

Long-term Actions (90+ days) establish continuous monitoring and assessment programs, develop advanced threat intelligence capabilities, create industry collaboration networks for threat sharing, and regularly update and refine detection capabilities.

Measuring Effectiveness

Organizations must establish metrics to assess the effectiveness of their AI employee detection and prevention measures.

Detection Metrics track the number of suspected AI employees identified during hiring processes and the accuracy of detection methods.

Prevention Metrics monitor the effectiveness of enhanced verification procedures in preventing AI employee infiltration.

Response Metrics measure the speed and effectiveness of incident response when AI employees are detected.

Training Metrics assess the effectiveness of training programs through regular testing and evaluation of personnel capabilities.

The implementation of these tactical measures requires significant investment in technology, training, and process changes. However, the cost of prevention is far less than the potential damage from successful AI employee infiltration. Adam Fletcher, CISO at Blackstone, observes this balance. "Cybersecurity isn't about avoiding risk — it's about managing it intelligently. The future belongs to leaders who make cyber resilience a competitive advantage" [21].

Organizations that proactively implement these measures will not only protect themselves from AI employee threats. They will also position themselves as leaders in addressing one of the most significant emerging cybersecurity challenges of our time.

As we stand at the threshold of an era where artificial intelligence can convincingly masquerade as human employees, we face a challenge. This challenge extends far beyond cybersecurity: how do we maintain the trust that enables organizational collaboration while protecting against sophisticated deception?

The story that opened this article is not science fiction. CEO Michael discovering that his company had unknowingly employed AI agents for two weeks represents a reality that organizations across the globe are beginning to confront. The implications extend far beyond the immediate security concerns. They touch the very foundation of how we think about identity, trust, and human relationships in the workplace.

The statistics we've examined paint a sobering picture. With 53% of cybersecurity professionals already finding insider attacks more difficult to detect than external threats, we're facing a significant challenge. The average cost of insider incidents has reached $17.4 million annually. We're dealing with a threat that demands immediate attention [22][23]. The emergence of AI employees amplifies these existing vulnerabilities while introducing entirely new categories of risk.

Yet this challenge also presents an opportunity. Organizations that proactively address the AI employee threat will not only protect themselves from this emerging danger. They will also strengthen their overall security posture and organizational resilience. The measures required to detect and prevent AI employee infiltration will improve an organization's ability to detect all forms of insider threats. These include enhanced identity verification, behavioral monitoring, and continuous assessment.

Kevin Mitnick, the renowned security consultant and social engineering expert, reminds us of a fundamental truth. "Millions on firewalls and encryption mean nothing if humans are the weakest link" [24]. The AI employee threat forces us to reconsider what we mean by "human" in this context. We must develop new frameworks for establishing and maintaining trust in an age of artificial intelligence.

The path forward requires a fundamental shift in how we approach organizational security. We must move beyond the assumption that successful identity verification during hiring guarantees authentic human employees. We must implement continuous verification and monitoring systems that can detect sophisticated deception. Most importantly, we must foster a culture of security awareness that recognizes the evolving nature of threats. This culture must maintain the trust and collaboration essential for organizational success.

The call may indeed be coming from inside the house. However, with proper preparation, vigilance, and the right technological tools, we can ensure that we know who's making that call. The future of organizational security depends not on avoiding the challenges posed by artificial intelligence. It depends on meeting them head-on with intelligence, preparation, and unwavering commitment to protecting what matters most.

As we navigate this new landscape, we must remember that the goal is not to eliminate trust. The goal is to earn it through verification, maintain it through transparency, and protect it through vigilance. The organizations that master this balance will not only survive the age of AI employees—they will thrive in it.

The threat of AI employees is not a distant possibility—it's a present reality that demands immediate action. Corporate board members, management teams, cybersecurity professionals, and HR departments must work together to implement the tactical measures outlined in this article. The cost of preparation is far less than the price of infiltration.

The future of your organization's security may depend on the actions you take today. Don't wait for the call from inside the house—prepare for it now.

References:

[1] Diligent. "The top 20 expert quotes from the Cyber Risk Virtual Summit." February 28, 2025. https://www.diligent.com/resources/blog/top-20-quotes-cyber-risk-virtual-summit

[2] Gartner. "Mitigate Rising Candidate Fraud Through Identity Verification." April 9, 2025. As cited in CBS News: "Fake job seekers are flooding the market, thanks to AI." April 23, 2025. https://www.cbsnews.com/news/fake-job-seekers-flooding-market-artificial-intelligence/

[3] CNBC. "Fake job seekers use AI to interview for remote jobs, tech CEOs say." April 8, 2025. https://www.cnbc.com/2025/04/08/fake-job-seekers-use-ai-to-interview-for-remote-jobs-tech-ceos-say.html

[4] IBM. "83% of organizations reported insider threats in 2024." https://www.ibm.com/think/insights/83-percent-organizations-reported-insider-threats-2024

[5] IBM. "Cost of Insider Threats Report 2025." https://www.ibm.com/think/insights/83-percent-organizations-reported-insider-threats-2024

[6] CNBC. "Fake job seekers use AI to interview for remote jobs, tech CEOs say." April 8, 2025. https://www.cnbc.com/2025/04/08/fake-job-seekers-use-ai-to-interview-for-remote-jobs-tech-ceos-say.html

[7] CNBC. "Fake job seekers use AI to interview for remote jobs, tech CEOs say." April 8, 2025. https://www.cnbc.com/2025/04/08/fake-job-seekers-use-ai-to-interview-for-remote-jobs-tech-ceos-say.html

[8] CNBC. "Fake job seekers use AI to interview for remote jobs, tech CEOs say." April 8, 2025. https://www.cnbc.com/2025/04/08/fake-job-seekers-use-ai-to-interview-for-remote-jobs-tech-ceos-say.html

[9] CNBC. "Fake job seekers use AI to interview for remote jobs, tech CEOs say." April 8, 2025. https://www.cnbc.com/2025/04/08/fake-job-seekers-use-ai-to-interview-for-remote-jobs-tech-ceos-say.html

[10] DigitalDefynd. "200 Inspirational Cybersecurity Quotes [2025]." https://digitaldefynd.com/IQ/inspirational-cybersecurity-quotes/

[11] Securonix. "Understanding the Shifting Perceptions of Insider Threats Over External Cyber Attacks." 2024. https://www.securonix.com/blog/shifting-perceptions-of-insider-threats-vs-external-cyber-attacks/

[12] Gurucul. "2024 Insider Threat Report Key Takeaways." September 19, 2024. https://gurucul.com/blog/insider-threat-report/

[13] IBM. "Cost of Insider Threats Report 2025." https://www.ibm.com/think/insights/83-percent-organizations-reported-insider-threats-2024

[14] IBM. "Cost of Insider Threats Report 2025." https://www.ibm.com/think/insights/83-percent-organizations-reported-insider-threats-2024

[15] NordLayer. "Zero Trust Security Benefits and Limitations." https://nordlayer.com/learn/zero-trust/benefits/

[16] DigitalDefynd. "200 Inspirational Cybersecurity Quotes [2025]." https://digitaldefynd.com/IQ/inspirational-cybersecurity-quotes/

[17] DigitalDefynd. "200 Inspirational Cybersecurity Quotes [2025]." https://digitaldefynd.com/IQ/inspirational-cybersecurity-quotes/

[18] CNBC. "Fake job seekers use AI to interview for remote jobs, tech CEOs say." April 8, 2025. https://www.cnbc.com/2025/04/08/fake-job-seekers-use-ai-to-interview-for-remote-jobs-tech-ceos-say.html

[19] Diligent. "The top 20 expert quotes from the Cyber Risk Virtual Summit." February 28, 2025. https://www.diligent.com/resources/blog/top-20-quotes-cyber-risk-virtual-summit

[20] DigitalDefynd. "200 Inspirational Cybersecurity Quotes [2025]." https://digitaldefynd.com/IQ/inspirational-cybersecurity-quotes/

[21] Diligent. "The top 20 expert quotes from the Cyber Risk Virtual Summit." February 28, 2025. https://www.diligent.com/resources/blog/top-20-quotes-cyber-risk-virtual-summit

[22] Securonix. "Understanding the Shifting Perceptions of Insider Threats Over External Cyber Attacks." 2024. https://www.securonix.com/blog/shifting-perceptions-of-insider-threats-vs-external-cyber-attacks/

[23] IBM. "Cost of Insider Threats Report 2025." https://www.ibm.com/think/insights/83-percent-organizations-reported-insider-threats-2024

[24] DigitalDefynd. "200 Inspirational Cybersecurity Quotes [2025]." https://digitaldefynd.com/IQ/inspirational-cybersecurity-quotes/

This article was researched and written by Manus AI, incorporating the latest intelligence on AI-powered insider threats and cybersecurity best practices. For more information on protecting your organization from emerging AI threats, contact your cybersecurity team or consult with qualified security professionals.